Towards VR Accessibility for Blind and Low-Vision Users Through a Multimodal Approach

Virtual reality (VR) is rapidly maturing and has the potential to become the next generation of the Internet by facilitating more immersive collaboration, education, and social interaction. However, current VR technology is vision-centric, which marginalizes more than a billion people with visual impairments worldwide. The goal of this research is to promote VR accessibility by designing and developing software and hardware solutions that integrate multimodal feedback, specifically 3D audio and on-body haptics, allowing blind individuals to explore VR spaces efficiently and immersively.

Project Team

Huaishu Peng (project lead), Jiasheng Li (student lead)

Project Outcomes

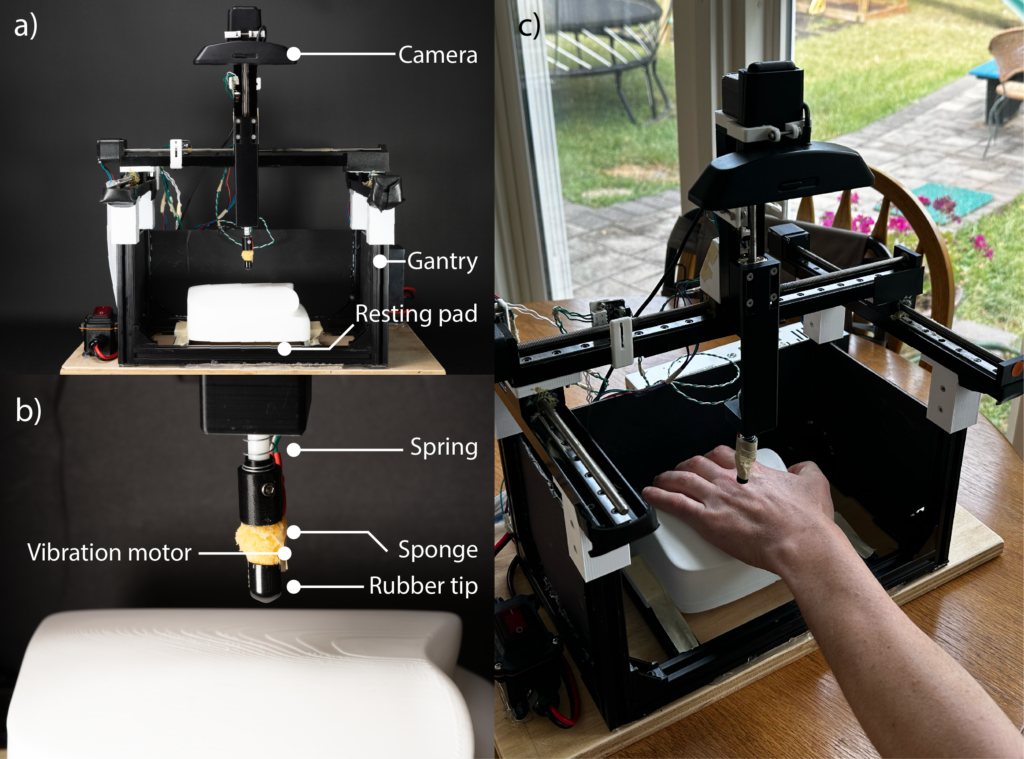

- The project has resulted in one full paper submission to IEEE VR 2025. The paper investigates the efficacy of two different haptic mechanisms, vibrotactile and skin-stretch, in delivering haptic notifications to blind users within an immersive VR context.

- Preliminary results have enabled the PI to submit a research grant proposal to the NIH, focusing on developing new technologies for VR accessibility.

A custom haptic device designed to render various haptic cues on the dorsal side of a blind participant: (a) Front view of the three-axis gantry system haptic device. (b) The touch probe. (c) Study conducted at a participant’s home.